The word “progressive” is used as a badge of honor by some and as a means of attack by others in modern politics. But being progressive meant something different in earlier times. Here, Joseph Larsen tells us about a new book on the subject: Illiberal Reformers: Race, Eugenics and American Economics in the Progressive Era, by Thomas C. Leonard.

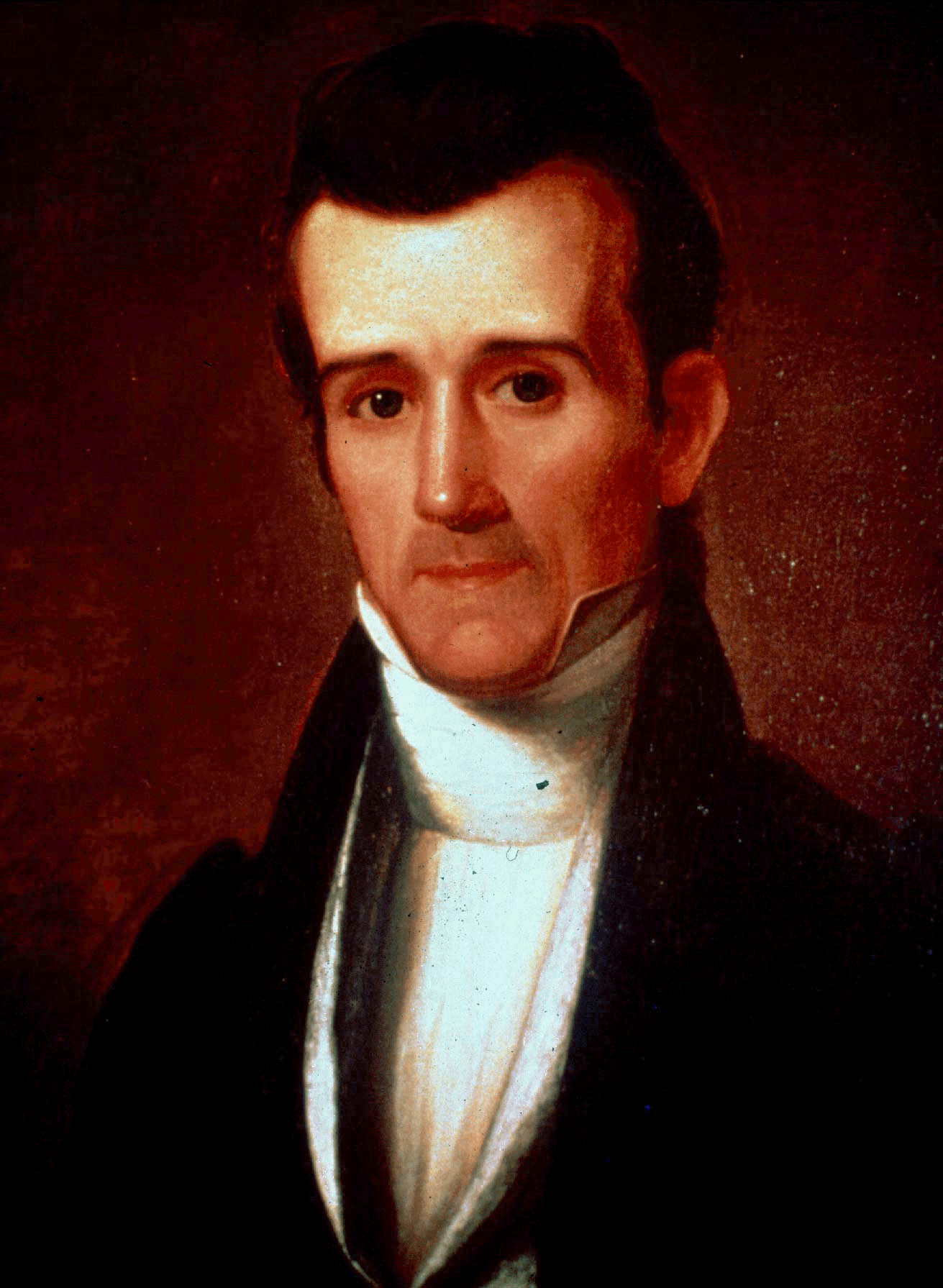

Bernie Sanders, a self-styled progressive and contender for the Democratic presidential nomination in 2016. Pictured here in 2014.

The United States is in an election year, with public confidence in government sinking – Gallup polls from 2014 and 2015 show confidence in Congress at all-time lows.[1] Voters and pundits are engaged in bitter battles over the meaning of left and right, with the politically charged term “progressive” used and abused by voices across the political spectrum. Bernie Sanders and Hillary Clinton, the leading Democratic Party candidates, both wear it as a badge of honor. Yet the term is often used but little understood. During Barack Obama’s first presidential term, one left-leaning history professor described a progressive as anyone “who believes that social problems have systemic causes and that governmental power can be used to solve those problems.”

Progressivism has an ugly history, too. The side of the Progressive Era that the American left would rather forget is dredged up by Princeton University scholar Thomas C. Leonard in Illiberal Reformers: Race, Eugenics and American Economics in the Progressive Era. In a scathing critique of the American Progressive Era, Leonard emphasizes the movement’s rejection of racial equality, individualism, and natural rights. Progressivism was inspired by the torrent of economic growth and urbanization that was late-nineteenth-century America. Mass-scale industrialization had turned the autonomous individual into a relic. “Society shaped and made the individual rather than the other way around,” writes Leonard. “The only question was who shall do the shaping and molding” (p. 23). Naturally, the progressives chose themselves for that task.

Much of the book is devoted to eugenics. Defined as efforts to improve human heredity through selective breeding, the now-defunct pseudoscience was a pillar of early-20th-century progressivism. Leonard argues that eugenics fit snugly into the movement’s faith in social control, economic regulation, and Darwinism (p. 88). But Darwin was ambiguous on whether natural selection produced not only change but also progress. This ambiguity gave progressive biologists and social scientists a chance to exercise their self-styled expertise. In their view, random genetic variation and the survival of inferior traits were useless; what was needed was social selection, reproduction managed from above to ensure the proliferation of the fit and the removal of the unfit (p. 106). Experts could identify undesirables and remove them from the gene pool. Forced sterilization and racial immigration quotas were popular methods.

The book’s most memorable chapter is the one analyzing minimum wage legislation. These days, this novelty of the administrative state is taken for granted – many on the left currently argue that raising the wage floor doesn’t destroy jobs – but Leonard traces its roots to Progressive Era biases against market exchange, immigrants, and racial minorities. Assuming that employers always hire the lowest-cost candidates and that non-Anglo-Saxon migrants (because of their supposedly inferior race) always underbid the competition, certain progressives set out to push them out of the labor market. Their tool was the minimum wage. Leonard writes:

The economists among labor reformers well understood that a minimum wage, as a wage floor, caused unemployment, while alternative policy options, such as wage subsidies for the working poor, could uplift unskilled workers without throwing the least skilled out of work … Eugenically minded economists such as [Royal] Meeker preferred the minimum wage to wage subsidies not in spite of the unemployment the minimum wage caused but because of it (p. 163).

In the hands of a lesser author, this book could have been a partisan attack on American liberalism, one that would find a welcoming audience in the current political landscape. Leonard deftly stands above the left-right fray. Rather than give ammunition to the right, he argues that progressivism attracted people from both ends of the political spectrum. Take Teddy Roosevelt, a social conservative and nationalist who nonetheless used the presidency to promote a progressive agenda. “Right progressives, no less than left progressives were illiberal, glad to subordinate individual rights to their reading of the common good. American conservative thinking was never especially antistatist,” Leonard writes (p. 39). Furthermore, eugenics had followers among progressives, conservatives, and socialists alike. The true enemy of progressivism? Classical liberalism, the belief that society is a web of interactions between individuals rather than a collective “social organism.”

Insights for today?

Leonard combines rigorous research with lucid writing, presenting a work that is intellectually sound, relevant, and original. Readers should take his insights to heart when asking how much of the Progressive Era still lives in 2016. The answer is not simple. Contemporary progressives like Clinton and Sanders certainly don’t espouse biological racism. For those who whip up anti-immigrant sentiment to win votes, “progressive” is a dirty word, not a badge of honor. Moreover, the American left long ago abandoned attempts to control the economy via technocratic experts.

But that doesn’t tell the whole story. Modern progressives still place a disturbing amount of faith in the administrative state and too little in market exchange. Leonard closes by arguing that the Progressive Era lives on: “Progressivism reconstructed American liberalism by dismantling the free market of classical liberalism and erecting in its place the welfare state of modern liberalism” (p. 191). It is up to the reader to decide whether that is something to be lauded or fought against.



Joseph Larsen is a political scientist and journalist based in Tbilisi, Georgia. He writes about the pressing issues of today, yesterday, and tomorrow. You can follow him on Twitter @JosephLarsen2.