The pocket diary of Rifleman William Eve of 1/16th (County of London) Battalion (Queen’s Westminster Rifles):

“Poured with rain all day and night. Water rose steadily till knee deep when we had the order to retire to our trenches. Dropped blanket and fur coat in the water. Slipped down as getting up on parapet, got soaked up to my waist. Went sand-bag filling and then sewer guard for 2 hours. Had no dug out to sleep in, so had to chop and change about. Roache shot while getting water and [Rifleman PH] Tibbs shot while going to his aid (in the mouth). Laid in open all day, was brought in in the evening”, unconscious but still alive. Passed away soon after.

The war caused 350,000 total American casualties, of which over 117,000 were deaths. The best estimates today are 53,000 combat deaths and 64,000 deaths from disease. (Official figures in 1919 were 107,000 total, with 50,000 combat deaths and 57,000 deaths from disease.) About half of the latter were from the great influenza epidemic of 1918-1920. Considering that 4,450,000 men were mobilized, and half of those were sent to Europe, the casualty rate was far lower than those suffered by all of the other combatants.

World War I represented the coming of age of American military medicine. The techniques and organizational principles of the Great War differed greatly from those of any earlier war and were far more advanced. Medical and surgical techniques, in contrast with previous wars, represented the best available in civilian medicine at the time. Indeed, many of the leaders of American medicine were found on the battlefields of Europe in 1917 and 1918. The efforts to meet the challenge were often hurried. The results lacked polish and were far from perfect. But the country can rightly be proud of the medical efforts made during the Great War.

The primary medical challenges for the U.S. upon entering the war were “creating a fit force of four million people, keeping them healthy and dealing with the wounded,” says Diane Wendt, curator of medicine and science at the Smithsonian's National Museum of American History. “Whether it was moving them through a system of care to return them to the battlefield or take them out of service, we have a nation that was coming to grips with that.”

The First World War created millions of casualties. New weapons such as the machine gun caused unprecedented damage to soldiers’ bodies. This presented new challenges to doctors on both sides in the conflict, as they sought to save their patients’ lives and limit the harm to their bodies. New types of treatment, organization and medical technologies were developed to reduce the number of deaths.

In addition to wounds, many soldiers became ill. Weakened immune systems and the presence of contagious disease meant that many men were in hospital for sickness, not wounds. Between October 1914 and May 1915 at the No 1 Canadian General Hospital, there were 458 cases of influenza and 992 of gonorrhea amongst officers and men.

Wounding also became a way for men to avoid the danger and horror of the trenches. Doctors were instructed to be vigilant in cases of ‘malingering’, where soldiers pretended to be ill or wounded themselves so that they did not have to fight. It was a common belief of the medical profession that wounds on the left hand were suspicious.

Wounding was not always physical. Thousands of men suffered emotional trauma from their war experience. ‘Shellshock’, as it came to be known, was viewed with suspicion by the War Office and by many doctors, who believed that it was another form of weakness or malingering. Sufferers were treated at a range of institutions.

Organization of Battlefield Medical Care

In response to the realities of the Western Front in Europe, the Medical Department established a treatment and evacuation system that could function in both static and mobile environments. Drawing on its history of success in the American Civil War, and on the best practices of the French and British systems, the Department created specific units designed to provide a sequence of continuous care from the front line to the rear area in what it labelled the Theater of Operations.

Casualties had to be taken from the field of battle to the places where doctors and nurses could treat them. They were collected by stretcher-bearers and moved by a combination of people, horse and cart, and later on by motorized ambulance ‘down the line’. Men would be moved until they reached a location where treatment for their specific injury would take place.

Where soldiers ended up depended largely on the severity of their wounds. Owing to the number of wounded, hospitals were set up in any available buildings, such as abandoned chateaux in France. Often Casualty Clearing Stations (CCS) were set up in tents. Surgery was often performed at the CCS; arms and legs were amputated, and wounds were operated on. As the battlefield became static and trench warfare set in, the CCS became more permanent, with better facilities for surgery and accommodation for female nurses, which was situated far away from the male patients.

Combat Related Injuries

For World War I, ideas of the front lines entered the popular imagination through works as disparate as All Quiet on the Western Front and Blackadder. The strain and the boredom of trench warfare are part of our collective memory; the drama of war comes from two sources: mustard gas and machine guns. The use of chemical weapons and the mechanization of shooting brought horror to men’s lives at the front. Yet they were not the greatest source of casualties. By far, artillery was the biggest killer in World War I, and provided the greatest source of war wounded.

World War I was an artillery war. In his book Trench: A History of Trench Warfare on the Western Front (2010), Stephen Bull concluded that on the Western Front, artillery was the biggest killer, responsible for “two-thirds of all deaths and injuries.” Of this total, a third resulted in death, two-thirds in injuries. Artillery wounded the whole body; if not entirely obliterated, the body was often dismembered, losing arms, legs, ears, noses, and even faces. Even when there was no superficial damage, concussive injuries and “shell shock” put many men out of action. Of course, shooting, in combat as well as from snipers, was another great source of wounding. Gas attacks were a third. Phosgene, chlorine, mustard gas, and tear gas debilitated more than killed, though many ended up suffering long-term disability. Overall, the war claimed about 10M military dead, and about 20M–21M military wounded, with 5% of those wounds life-debilitating, that is, about a million persons.

August 1914 would dramatically alter the paradigm of casualty care. Gigantic cannons, high explosives, and the machine gun soon invalidated all pre-war suppositions and strategy. More than eighty percent of wounds were due to shell fragments, which caused multiple shredding injuries. "There were battles which were almost nothing but artillery duels," a chagrined Edmond Delorme observed. Mud and manured fields took care of the rest. Devitalized tissue was quickly occupied by Clostridia pathogens, and gas gangrene became a deadly consequence. Delays in wound debridement, prompted by standard military practice, caused astounding lethality. Some claimed more than fifty percent of deaths were due to negligent care. And the numbers of casualties were staggering. More than 200,000 were wounded in the first months alone: far too many for the outdated system of triage and evacuation envisioned just years before. American observer Doctor Edmund Gros visited the battlefield in 1914:

If a soldier is wounded in the open, he falls on the firing line and tries to drag himself to some place of safety. Sometimes the fire of the enemy is so severe that he cannot move a step. Sometimes, he seeks refuge behind a haystack or in some hollow or behind some knoll…. Under the cover of darkness, those who can do so walk with or without help to the Poste de Secours. . . . Stretcher-bearers are sent out to collect the severely wounded . . . peasants' carts and wagons [are used] . . . the wounded are placed on straw spread on the bottom of these carts without springs, and thus they are conveyed during five or six hours before they reach the sanitary train or temporary field hospital. What torture many of them must endure, especially those with multiple fractures!

Non-Combat Related Death and Illness

In the course of the First World War, many more soldiers died of disease than by the efforts of the enemy. Lice caused itching and transmitted infections such as typhus and trench fever. In summer it was impossible to keep food fresh, and food poisoning was widespread. In winter men suffered from frostbite and exposure and from trench foot. There were no antibiotics, so deaths from gangrenous wounds and syphilis were common. Others died by suicide as a result of psychological stress.

Battlefield Wounded and Surgery

In the early years of the war, compound lower limb fractures caused by gunshots in trench warfare sparked debate over the traditional splinting practices that delayed surgery, leading to high mortality rates, particularly for open femoral fractures.

Femoral fractures stranded soldiers on the battlefield, and stretcher-bearers reached them only with difficulty, leaving many lying wounded for days or enduring rough transport, all of which left soldiers particularly vulnerable to gas gangrene and secondary hemorrhage. Australian surgeons in France reported injury-to-treatment times ranging from 36 hours to a week and averaging three to four days. Fracture immobilization during transport was poor, and in the early war years surgeons reported about 80% mortality for soldiers with femoral fractures transported from the field.

By 1915 medics and stretcher-bearers were routinely trained to apply immobilizing splints, and by 1917 specialized femur wards had been established; during this period mortality fell to about 12% for all fractures and to below 20% for open femoral fractures.

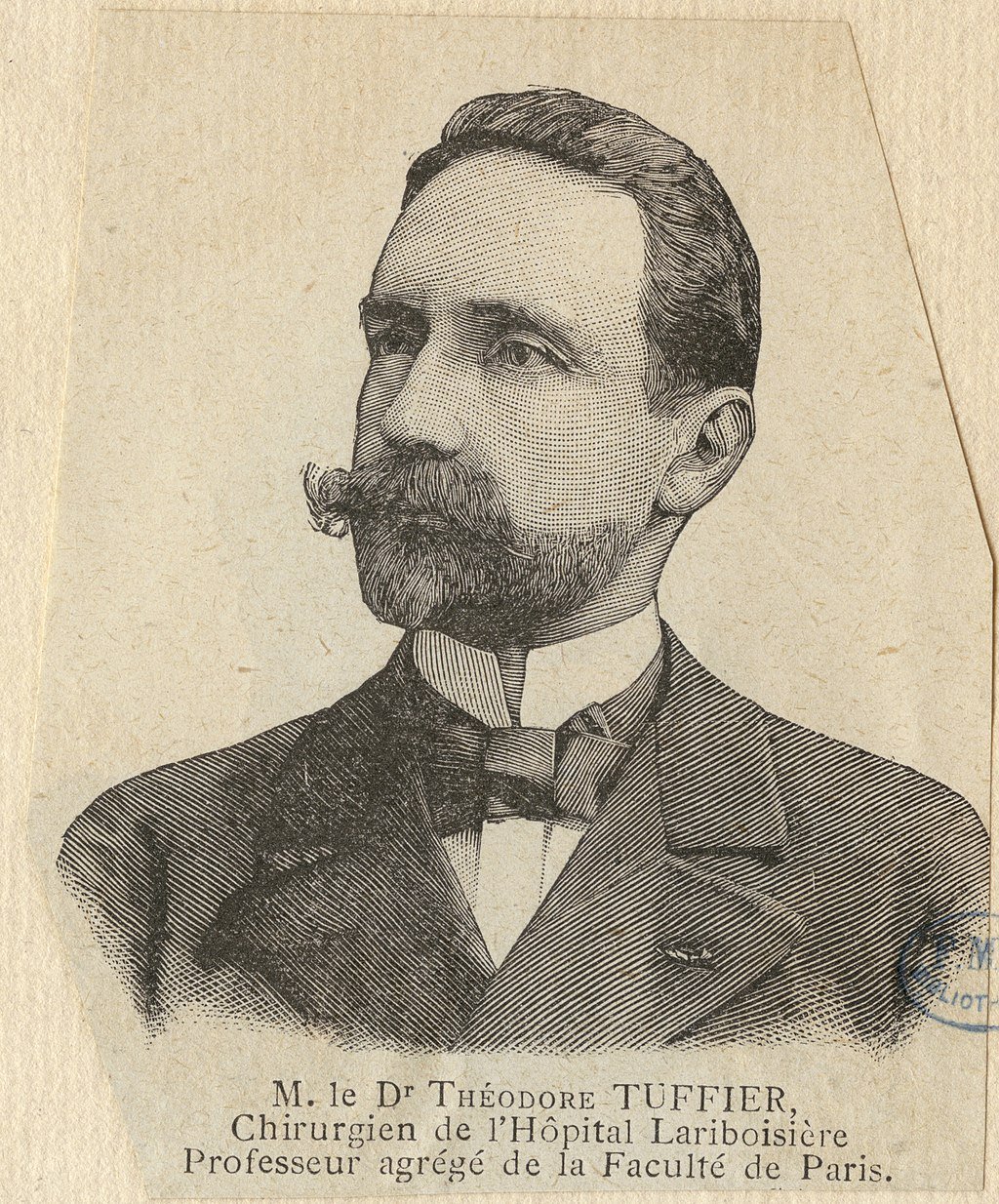

Théodore Tuffier, a leading French surgeon, testified in 1915 to the Academy of Medicine that 70 percent of amputations were due to infection, not to the initial injury. “Professor Tuffier stated that antiseptics had not proven satisfactory, that cases of gas gangrene were most difficult to handle,” Crile wrote. “All penetrating wounds of the abdomen, he said, die of shock and infection. … He himself tried in fifteen instances to perform immediate operations in cases of penetrating abdominal wounds, and he lost every case. In fact, they have abandoned any attempt to operate penetrating wounds of the abdomen. All wounds large and small are infected. The usual antiseptics, bichloride, carbolic, iodine, etc., fail.”

Every war has its distinctive injury. For World War I, it was facial injuries, which affected 10–15% of casualties, or over a half-million men. The nature of combat--with faces often exposed above the trench line--contributed to this high incidence. Most countries founded specialist hospitals with surgeons like Johannes Esser in the Netherlands and Hippolyte Morestin in France who dedicated their practices to developing new techniques to repair facial trauma.

World War I presented surgeons with myriad new challenges. They responded to these difficulties not only with courage and sedulity but also with an open mind and active investigation. Military medicine practiced in 1918 differed substantially from that in 1914. This shift did not occur by happenstance. It represented collaboration between some of the brightest minds in academia and professional military doctors, combining their expertise to solve problems, take care of patients, and preserve fighting strength. It required multiple inter-allied conferences both to identify common medical problems and to determine optimal solutions. Reams of books and pamphlets buttressed the in-person instruction consultants provided to educate young physicians on best practices. Most significantly, this change demanded a willingness to admit a given intervention was not working, creatively try something new, assess its efficacy using data from thousands of soldiers, disseminate the knowledge, and ensure widespread application of the novel practice. No step was easy, and combining them while fighting the Great War required a remarkable degree of perseverance, intellectual honesty, and operational flexibility.

Medical advances and improvements leading up to World War II

With most of the fighting set in the trenches of Europe and with the unexpected length of the war, soldiers were often malnourished, exposed to all weather conditions, sleep-deprived, and often knee-deep in the mud along with the bodies of men and animals. In the wake of the mass slaughter, it became clear that the “only way to cope with the sheer numbers of casualties was to have an efficient administrative system that identified and prioritized injuries as they arrived.” This was the birth of the Triage System. Medicine, in World War I, made major advances in several directions. The war is better known as the first mass killing of the 20th century—with an estimated 10 million military deaths alone—but for the injured, doctors learned enough to vastly improve a soldier’s chances of survival. They went from amputation as the only solution, to being able to transport soldiers to hospital, to disinfect their wounds and to operate on them to repair the damage wrought by artillery. Ambulances, antiseptics, and anesthesia, three elements of medicine taken entirely for granted today, emerged from the depths of suffering in the First World War.

Two Welshmen were responsible for one of the most important advances - the Thomas splint - which is still used in war zones today. It was invented in the late 19th century by pioneering surgeon Hugh Owen Thomas, often described as the father of British orthopedics, born in Anglesey to a family of “bone setters”. His nephew, Robert Jones, brought it into widespread use on the Western Front during the war.

In France, vehicles were commandeered to become mobile X-ray units. New antiseptics were developed to clean wounds, and soldiers became more disciplined about hygiene. Also, because the sheer scale of the destruction meant armies had to become better organized in looking after the wounded, surgeons were drafted in closer to the frontline and hospital trains used to evacuate casualties.

When the war broke out, the making of prosthetic limbs was a small industry in Britain. Production had to increase dramatically. One of the ways this was achieved was by employing men who had amputations to make prosthetic limbs – most commonly at Erskine and Roehampton, where they learnt the trade alongside established tradespeople. This had the added advantage of providing occupation for discharged soldiers who, because of their disabilities, would probably have had difficulty finding work.

While it was not an innovation of war, the process of blood transfusion was greatly refined during World War I and contributed to medical progress. Previously, all blood stored near the front lines was at risk of clotting. Anticoagulant methods were implemented, such as adding citrate or using paraffin inside the storage vessel. This resulted in blood being successfully stored for an average of 26 days, simplifying transportation. The storage and maintenance of blood meant that by 1918 blood transfusions were being used in front-line casualty clearing stations (CCS). Clearing stations were medical facilities that were positioned just out of enemy fire.

One of the most profound medical advancements resulting from World War I was the exploration of mental illness and trauma. Originally, any individual showing symptoms of neurosis was immediately sent to an asylum and consequently forgotten. As World War I made its debut, it brought forward a new type of warfare that no one was prepared for in its technological, military, and biological advances.

Another successful innovation came in the form of the base hospitals and clearing stations. These allowed doctors and medics to categorize men as serious or mild, and results came to light that many stress-related disorders were a result of exhaustion or deep trauma. “Making these distinctions was a breakthrough…the new system meant that mild cases could be rested then returned to their posts without being sent home.”

What do you think of trauma during World War I? Let us know below.

Now read Richard’s piece on the history of slavery in New York here.